How does an LLM Work?

The 7 Steps: How Your Large Language Model Prompt Becomes an Answer

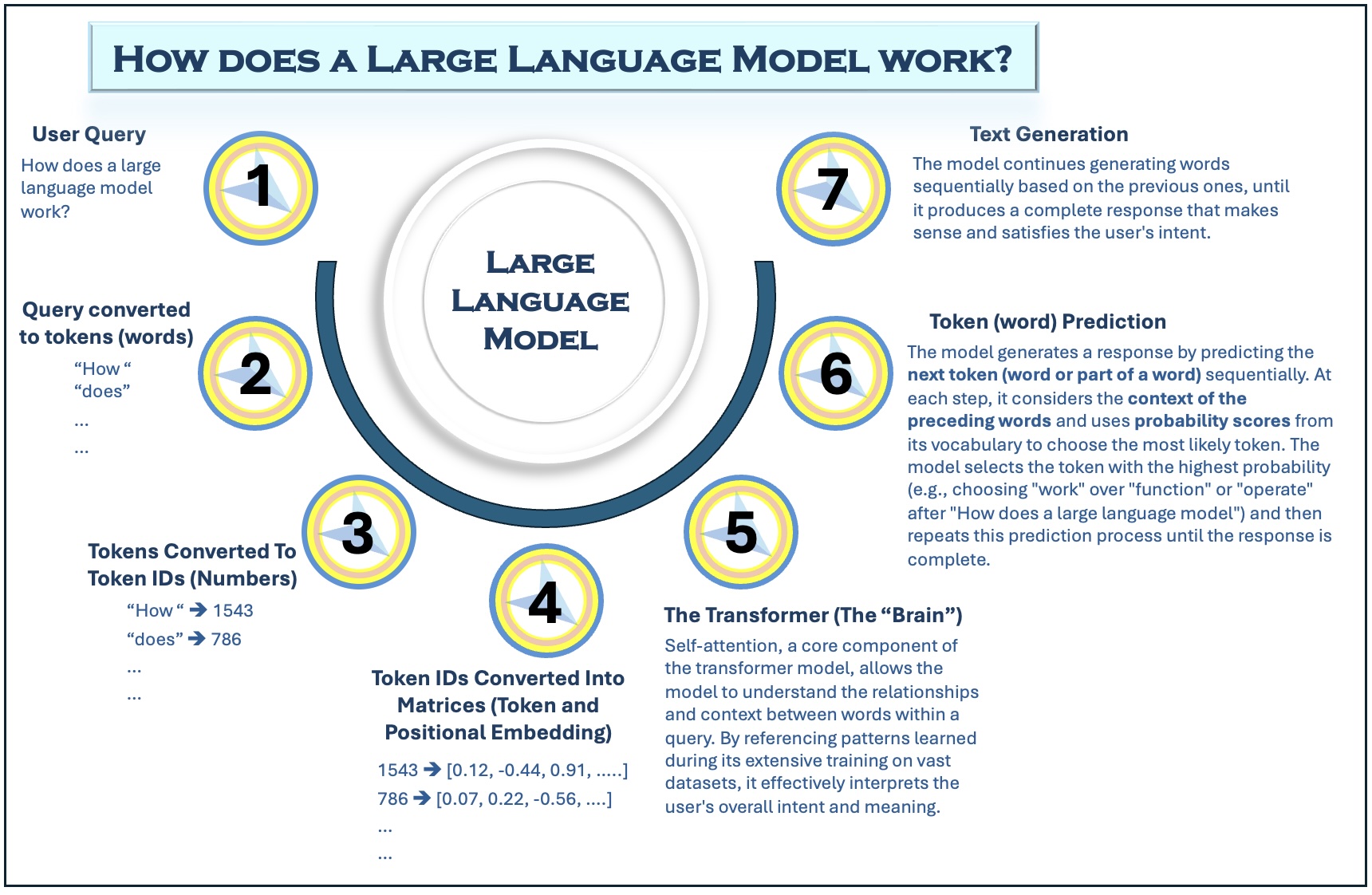

We use Large Language Models (LLMs) like ChatGPT and Gemini every day, but how does a plain-text prompt turn into a coherent response?

Let’s break it down in plain language with a simple, 7-step flow diagram (see the image above)!

The Core Idea: An LLM is a prediction engine. It doesn't understand words the way a human does; it works with numerical representations of words (called vectors) and uses them to predict what text should come next.

To further simplify the inner workings of an LLM, let’s use a simple analogy from everyday life:

Consider a famous restaurant in your town, where a single chef works. He has been trained to prepare dishes from cuisines all around the world. He may not know how to make every dish with 100% authenticity, but he can prepare each one with about 80% accuracy thanks to his broad training.

- A guest orders a dish — Thai vegetable fried rice with no egg, no meat.

- The chef breaks the order into small, actionable pieces, such as: “Thai,” “vegetable,” “fried,” “rice,” “no egg,” “no meat.”

- The chef assigns each ingredient a unique inventory code (e.g., rice-1543, vegetables-786) so he can work with them quickly and consistently.

- The chef measures each ingredient in grams and keeps them aside in separate bowls.

- The chef arranges and adds the ingredients in a predefined sequence. If the ingredients are not added in the correct order, the dish won’t turn out as intended.

- The chef predicts the next necessary ingredient or action needed to continue the recipe.

- He repeats this process—selecting and adding ingredients/actions—until the dish is complete and ready to be served.
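For readers who like to see things in code, here is a minimal Python sketch of one plausible reading of the seven steps: order (prompt), pieces (tokens), inventory codes (token IDs), measured ingredients (vectors), sequence (position information), predicting the next ingredient (next-token prediction), and repeating until done (generation loop). Everything in it is an assumption made for illustration: the eight-word vocabulary, the random numbers standing in for learned weights, the simple position signal, and the averaging step that replaces the real neural network layers. It shows the shape of the flow, not how any real LLM is implemented, and because the weights are random, the tokens it generates are meaningless.

```python
import math
import random

# Hypothetical toy vocabulary: a token's list index is its ID (the "inventory code").
VOCAB = ["thai", "vegetable", "fried", "rice", "no", "egg", "meat", "<end>"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

EMBED_DIM = 4
rng = random.Random(0)  # fixed seed so the toy weights are reproducible
# Random stand-ins for weights that a real model would learn during training.
EMBEDDINGS = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in VOCAB]
OUTPUT_WEIGHTS = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)] for _ in VOCAB]


def tokenize(prompt):
    """Step 2: break the order into small pieces (here, lowercase words)."""
    return prompt.lower().replace(",", " ").split()


def to_ids(tokens):
    """Step 3: look up each piece's numeric ID."""
    return [TOKEN_TO_ID[t] for t in tokens]


def embed(ids):
    """Steps 4-5: turn each ID into a vector, then add a position signal."""
    vectors = []
    for pos, token_id in enumerate(ids):
        # 0.1 * pos is a made-up position signal, not a real positional encoding.
        vectors.append([x + 0.1 * pos for x in EMBEDDINGS[token_id]])
    return vectors


def next_token_probs(vectors):
    """Step 6: score every vocabulary entry and turn scores into probabilities."""
    # Averaging the vectors is a crude stand-in for the model's internal layers.
    context = [sum(column) / len(vectors) for column in zip(*vectors)]
    scores = [sum(w * c for w, c in zip(row, context)) for row in OUTPUT_WEIGHTS]
    # Softmax: subtract the max score for numerical stability, then normalize.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def generate(prompt, max_new_tokens=5):
    """Step 7: predict, append, and repeat until <end> or the length limit."""
    ids = to_ids(tokenize(prompt))  # Step 1 is the prompt itself
    for _ in range(max_new_tokens):
        probs = next_token_probs(embed(ids))
        next_id = max(range(len(probs)), key=probs.__getitem__)  # greedy pick
        if VOCAB[next_id] == "<end>":
            break
        ids.append(next_id)
    return [VOCAB[i] for i in ids]


print(generate("Thai vegetable fried rice, no egg, no meat"))
```

The loop in `generate` is the part that matches the chef most closely: each pass looks at everything added so far, picks the single most likely next item, and adds it before deciding again. Real systems often sample from the probabilities instead of always taking the top choice, which is one reason the same prompt can produce different answers.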

I’d love to hear your thoughts—which step do you find most fascinating?

#AI #LLMs #GenerativeAI #TechExplainedInPlainLanguage #DeepLearning

Note: This is a simplified 7-step explanation of how LLMs work—there are many more fine-grained operations happening under the hood.